Felix Hill

Research Scientist, DeepMind, London

Deep Learning for Language Processing

This course was first taught to MPhil students at the Cambridge University Computer Lab in 2018 by Stephen Clark and Felix Hill, with guest lectures from the brilliant Ed Grefenstette and Chris Dyer.

The course gave a basic introduction to artificial neural networks, including the sometimes overlooked question of why these are appropriate models for language processing. We then covered some more advanced topics, finishing with some of the embodied and situated language learning research done at DeepMind.

Lectures and slides

- Introduction to Neural Networks for NLP (Clark) slides

- Feedforward Neural Networks for NLP (Clark) slides

- Training and Optimization (Clark) slides

- Models for learning Word Embeddings (Hill) slides

- Recurrent Neural Networks (Hill) slides

- TensorFlow (Clark) slides

- Long Short Term Memory (Hill) slides

- Conditional Language Modeling (Dyer) slides

- Better Conditional Language Modeling (Dyer) slides

- Machine Comprehension (Grefenstette) slides

- Convolutional Neural Networks (Hill) slides

- Sentence Representations (Grefenstette) slides

- Sentence Representations (Hill) slides

- Image Captioning (Clark) slides

- Situated Language Learning (Hill) slides

Practical exercises and code

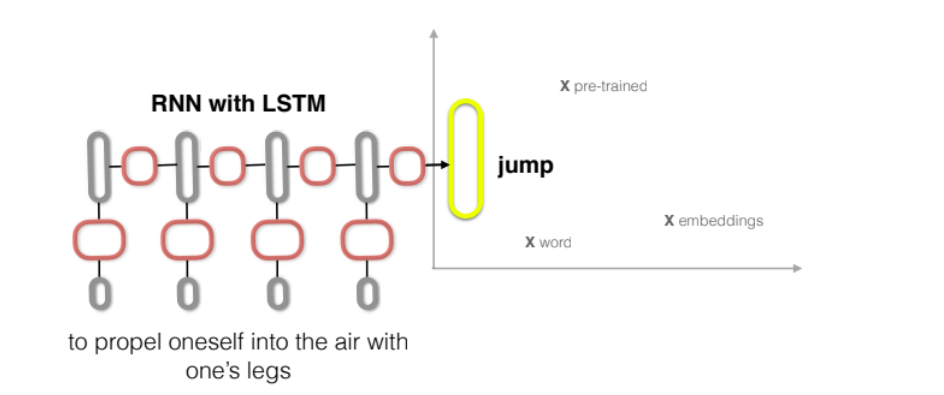

As a practical exercise, students experimented with training neural network models to map from a dictionary definition to a distributed representation of the word that the definition defines, as explained in the paper Learning to Understand Phrases by Embedding the Dictionary.

If you want to try it yourself, you could start with the code here, perhaps following some of the instructions here. The dictionary data (described in those instructions) can be found here. Note that, for reasons outside our control, this is not exactly the same data as was used in the experiments in the paper, so your results won’t be directly comparable with the results reported there.
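
To give a feel for the task before diving into the course code, here is a minimal sketch of the definition-to-embedding idea. It is not the course code or the model from the paper: it uses an untrained baseline that simply averages word vectors, where the exercise asks you to train a neural network to produce the definition vector. The embedding values, the `EMBEDDINGS` table, and the function names `encode_definition` and `rank_words` are all made up for illustration.

```python
import math

# Toy 3-dimensional word embeddings. These values are invented for
# illustration; a real experiment would load pre-trained embeddings.
EMBEDDINGS = {
    "feline": [0.9, 0.1, 0.0],
    "animal": [0.6, 0.4, 0.1],
    "small": [0.2, 0.2, 0.1],
    "cat": [0.8, 0.2, 0.05],
    "vehicle": [0.0, 0.1, 0.9],
    "road": [0.1, 0.0, 0.8],
    "car": [0.05, 0.1, 0.95],
}


def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def encode_definition(definition):
    """Map a definition to a vector by averaging its known word vectors.

    This averaging step is a stand-in (an assumption of this sketch) for
    the trained encoder that the exercise is about.
    """
    tokens = [t for t in definition.lower().split() if t in EMBEDDINGS]
    if not tokens:
        raise ValueError("no known words in definition")
    dim = len(EMBEDDINGS[tokens[0]])
    return [sum(EMBEDDINGS[t][i] for t in tokens) / len(tokens)
            for i in range(dim)]


def rank_words(definition, candidates):
    """Rank candidate words by similarity to the encoded definition."""
    query = encode_definition(definition)
    return sorted(candidates,
                  key=lambda w: cosine(query, EMBEDDINGS[w]),
                  reverse=True)


if __name__ == "__main__":
    print(rank_words("small feline animal", ["car", "cat"]))
    print(rank_words("road vehicle", ["car", "cat"]))
```

In a trained version, `encode_definition` would be replaced by a learned model whose output is pushed towards the embedding of the defined word, and `rank_words` would then act as a simple reverse dictionary over the vocabulary.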